Quick Answer

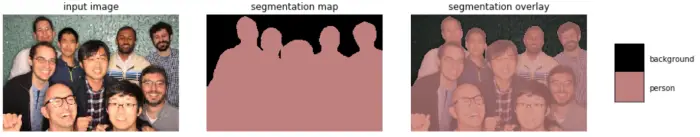

- Google released the Pixel 2 with an outstanding camera, and Portrait mode is one of its most appreciated features. Google has now open-sourced the semantic image segmentation model behind it.

- The model labels every pixel, so the Pixel 2 knows which part of the image is background and which is foreground, and it can blur only the background.

- The model pinpoints the outline of each object, so the software knows exactly where the subject ends and the background begins.

Google released the Pixel 2 with an outstanding camera, and one of its most appreciated features is Portrait mode. Portrait mode relies on machine learning to decide which parts of a photo to blur. Today, Google has open-sourced its semantic image segmentation model, DeepLab-v3+ (implemented in TensorFlow), the technology behind the Pixel 2's single-lens Portrait mode.

This deep-learning model assigns a semantic label to every pixel in the picture, classifying it as, for example, road, sky, person, or dog. Using these labels, the Pixel 2 knows which part of the image is background and which is foreground, so it can blur the background while keeping the subject sharp.
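To make the idea concrete, here is a minimal sketch in Python, assuming a grayscale image and a label map stored as nested lists of the same size. The class id `PERSON = 1` and the helpers `box_blur` and `portrait_blur` are hypothetical names for illustration. A simple box blur stands in for a real lens-blur effect, and this is not Google's implementation: it only shows how per-pixel labels decide which pixels stay sharp.

```python
from typing import List, Set

Grid = List[List[int]]


def box_blur(img: Grid, radius: int = 1) -> Grid:
    """Average each pixel with its neighbours in a (2r+1)x(2r+1) window.

    The window is clipped at the image border, so edge pixels average fewer values.
    """
    h, w = len(img), len(img[0])
    out = [[0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            total, count = 0, 0
            for yy in range(max(0, y - radius), min(h, y + radius + 1)):
                for xx in range(max(0, x - radius), min(w, x + radius + 1)):
                    total += img[yy][xx]
                    count += 1
            out[y][x] = total // count  # integer average of the window
    return out


def portrait_blur(img: Grid, labels: Grid, foreground: Set[int], radius: int = 1) -> Grid:
    """Keep pixels whose label is in `foreground`; use the blurred value everywhere else."""
    blurred = box_blur(img, radius)
    return [
        [img[y][x] if labels[y][x] in foreground else blurred[y][x]
         for x in range(len(img[0]))]
        for y in range(len(img))
    ]


PERSON = 1  # hypothetical class id for "person" in the label map

image = [[0, 0, 0],
         [0, 90, 0],
         [0, 0, 0]]
labels = [[0, 0, 0],
          [0, PERSON, 0],
          [0, 0, 0]]

print(portrait_blur(image, labels, {PERSON}))
# [[22, 15, 22], [15, 90, 15], [22, 15, 22]]
```

The centre pixel is labelled as a person, so it keeps its original value, while every background pixel is replaced by its blurred average. A production pipeline would use a proper lens-blur kernel and soften the mask edge; this sketch only shows the label-mask-composite step.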

On its own, a single lens cannot easily produce a convincing depth-of-field effect. The segmentation model fills that gap by pinpointing the outline of each object, so the software knows exactly where the subject ends and the background begins. As Google explains:

“Assigning these semantic labels requires pinpointing the outline of objects, and thus imposes much stricter localization accuracy requirements than other visual entity recognition tasks such as image-level classification or bounding box-level detection.”
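The difference between box-level detection and pixel-level labels can be illustrated with a toy binary mask. The sketch below assumes a hypothetical 5x5 mask (1 = object, 0 = background) and 4-neighbour connectivity; the helpers `outline` and `bounding_box_background` are illustrative names, not part of Google's release. It finds the object pixels that touch the background, and counts how many background pixels a tight bounding box would wrongly include.

```python
from typing import List, Set, Tuple

Mask = List[List[int]]  # 1 = object pixel, 0 = background pixel


def outline(mask: Mask) -> Set[Tuple[int, int]]:
    """Return (row, col) of object pixels that touch background or the image edge (4-neighbour)."""
    h, w = len(mask), len(mask[0])
    edge = set()
    for y in range(h):
        for x in range(w):
            if not mask[y][x]:
                continue
            for dy, dx in ((-1, 0), (1, 0), (0, -1), (0, 1)):
                ny, nx = y + dy, x + dx
                if not (0 <= ny < h and 0 <= nx < w) or not mask[ny][nx]:
                    edge.add((y, x))
                    break
    return edge


def bounding_box_background(mask: Mask) -> int:
    """Count background pixels that fall inside the object's tight bounding box."""
    coords = [(y, x) for y, row in enumerate(mask) for x, v in enumerate(row) if v]
    if not coords:
        return 0  # no object, so no bounding box
    ys = [y for y, _ in coords]
    xs = [x for _, x in coords]
    return sum(
        1
        for y in range(min(ys), max(ys) + 1)
        for x in range(min(xs), max(xs) + 1)
        if not mask[y][x]
    )


mask = [
    [0, 0, 0, 0, 0],
    [0, 0, 1, 0, 0],
    [0, 1, 1, 1, 0],
    [0, 0, 1, 0, 0],
    [0, 0, 0, 0, 0],
]

print(sorted(outline(mask)))          # [(1, 2), (2, 1), (2, 3), (3, 2)]
print(bounding_box_background(mask))  # 4
```

For this plus-shaped object, the 3x3 bounding box contains 4 background pixels, which a box-based approach would blur incorrectly, while the per-pixel mask marks exactly where the object stops.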

Google noted that today's accuracy levels would have been hard to imagine even five years ago, and credited advances in methods, hardware, and datasets for making them possible.

“We hope that publicly sharing our system with the community will make it easier for other groups in academia and industry to reproduce and further improve upon state-of-art systems, train models on new datasets, and envision new applications for this technology.”

When the Pixel 2 launched, its Portrait mode quickly became a favorite, and many users wanted their own phones to take portrait shots like it. If you want Portrait mode on your Nexus smartphone too, follow the steps here.