Quick Answer

- When you send a query through the official DeepSeek app or website, it is processed on DeepSeek’s servers in China.

- Because DeepSeek is open source, you can run its models on your own computer or server without sharing anything with DeepSeek’s servers.

- This guide covers three easy ways to use DeepSeek without sending data to China: running it locally with Ollama, running it on Google Colab, and using it through Perplexity AI.

- These methods also let you use DeepSeek without the censorship applied in the official app, which tends to favour China in its responses.

DeepSeek has taken the internet by storm thanks to its low cost and open-source nature. It rivals OpenAI’s ChatGPT, currently the most popular AI chatbot and LLM service available to the public. However, DeepSeek’s Chinese origin raises data privacy concerns, because your prompts and other information are sent to servers in China. In this guide, let’s look at three easy ways to use DeepSeek without sending data to China, and to use it in an uncensored manner.


Does DeepSeek Send Data to China?

DeepSeek is a private company based in Hangzhou, China, and its servers are also located in China. When you send a query through the official DeepSeek app or website, the request goes directly to these Chinese servers, which process your prompt and generate a response. This is also mentioned in DeepSeek’s terms and conditions, which you have to agree to during sign-up.

The company does not specify exactly what data it collects and stores. But to answer the main question: yes, at least part of your data is sent to China when you use DeepSeek’s official app or website.

Multiple users have also reported that DeepSeek favours China when answering some controversial questions. It is also heavily censored on topics critical of China’s global affairs, where the chatbot refuses to respond. This gives us one more reason to use an uncensored version of DeepSeek that does not go through its Chinese servers.

Can You Use DeepSeek Without Sending Data to China?

Yes. Since DeepSeek is an open-source model, you can run it on hardware outside DeepSeek’s control without sending any data to China. Let’s understand this with an example.

Suppose I have a camera that you want to use, but you want to keep the photos you take private. In that case, you can put your own SD card in the camera, take your photos, and then remove the SD card before returning the camera to me. This way, you benefit from my camera without sharing the photos you took.

This is exactly how you can use DeepSeek without sharing any data with its Chinese servers. Because DeepSeek is open source, you can run its models locally on any server or computer and use them without sending anything to external servers.

How to Use DeepSeek Without Sending Data to China

There are multiple ways to use DeepSeek without sharing any information with its Chinese servers. Some methods are free, while others need a paid subscription to a third-party platform. Let’s explore both.

Method 1: Run DeepSeek Locally On Your Computer Using Ollama

Ollama is a free and open-source tool that allows you to run large language models locally on your computer. It works on Windows, macOS, and Linux. You can also use it to run DeepSeek.

Before we get started, we need to understand DeepSeek’s different versions and models. DeepSeek AI is gaining popularity for its deepseek-r1 model, which competes with OpenAI’s flagship o1 model. However, the full model is extremely large, running to hundreds of gigabytes even in compressed form, so it is practically impossible to run on a typical personal computer. It needs powerful servers with multiple high-end GPUs to function properly.
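A quick back-of-the-envelope calculation shows why the full model is out of reach for most PCs: the weights alone take roughly (number of parameters × bits per parameter ÷ 8) bytes. The sketch below assumes about 671 billion parameters for the full deepseek-r1 model and ignores extra memory needed at runtime (context cache, activations, quantization overhead), so real requirements are higher.

```python
def estimate_weights_gb(num_params: float, bits_per_param: float) -> float:
    """Rough size of the model weights alone, in gigabytes (10**9 bytes).

    Assumption: every parameter is stored at the same precision; runtime
    memory (context cache, activations) is not included.
    """
    bytes_total = num_params * bits_per_param / 8
    return bytes_total / 1e9


# Full deepseek-r1 is assumed here to have about 671 billion parameters.
for label, bits in [("16-bit", 16), ("8-bit", 8), ("4-bit", 4)]:
    print(f"{label}: ~{estimate_weights_gb(671e9, bits):.0f} GB")
```

Even at 4-bit precision the weights come to over 300GB, which is in line with the 404GB download listed for the 671b model below.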

Using Ollama, we can run smaller, distilled versions of the deepseek-r1 model locally on our system. While they are not as powerful as the full version, they are more than enough for personal use, with the added benefit of not sharing any data with an external server. Another benefit of this method is that you don’t have to sign up or create an account, so your personal data stays private. Let’s get started.

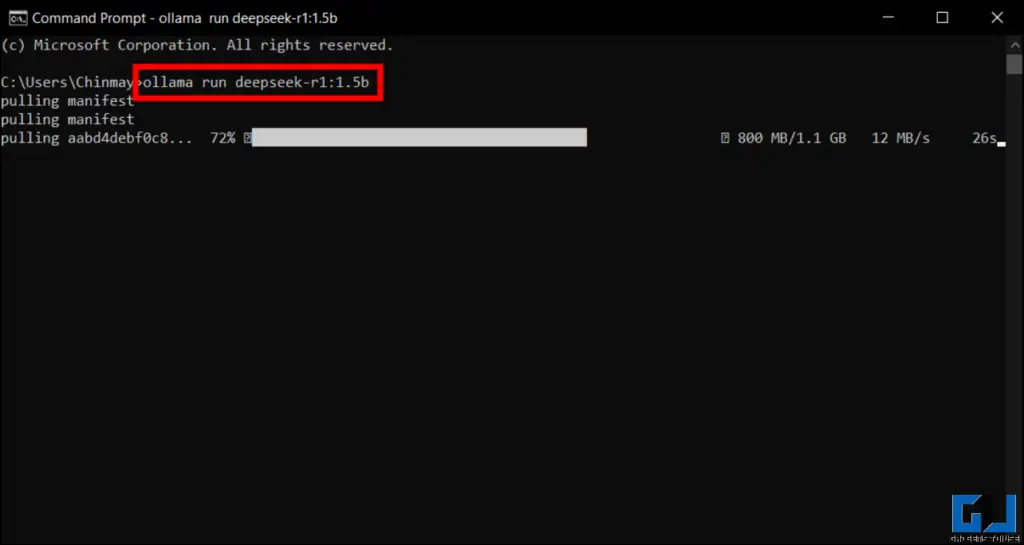

1. Download Ollama from the official website and install it on your computer. We have demonstrated this guide on a Windows computer, but you can also do this on macOS and Linux.

2. Open the Command Prompt on your computer. You can also use Windows PowerShell or Windows Terminal (or the Terminal app on macOS and Linux).

3. Enter the following command:

ollama run deepseek-r1:1.5b

4. On the first run, Ollama downloads the model files. This is a one-time process; the download is about 1.1GB for our selected model.

Note: For this guide, we are using the smallest version of the deepseek-r1 model. If you want better results, you can use a larger model, but downloads go up to 404GB. You can choose between seven models using the corresponding commands:

| DeepSeek Model | Download Size | Command |
|---|---|---|
| 1.5b | 1.1GB | ollama run deepseek-r1:1.5b |
| 7b | 4.7GB | ollama run deepseek-r1:7b |
| 8b | 4.9GB | ollama run deepseek-r1:8b |
| 14b | 9.0GB | ollama run deepseek-r1:14b |
| 32b | 20GB | ollama run deepseek-r1:32b |
| 70b | 43GB | ollama run deepseek-r1:70b |
| 671b | 404GB | ollama run deepseek-r1:671b |
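To pick a size from the table, a simple rule of thumb is to choose the largest model whose download fits comfortably in the memory you can spare. The sketch below uses the download sizes from the table; the 1.2 headroom factor is a hypothetical safety margin, not an official Ollama requirement, so treat the result as a starting point.

```python
from typing import Optional

# Approximate download sizes in GB for each deepseek-r1 tag (from the table above).
DOWNLOAD_SIZES_GB = {
    "1.5b": 1.1, "7b": 4.7, "8b": 4.9, "14b": 9.0,
    "32b": 20.0, "70b": 43.0, "671b": 404.0,
}


def pick_model(memory_gb: float, headroom: float = 1.2) -> Optional[str]:
    """Return the largest tag whose size * headroom fits in memory_gb, or None.

    Assumption: a model needs at least its download size in RAM or GPU
    memory, plus some extra (the hypothetical `headroom` factor).
    """
    fitting = [
        (size, tag)
        for tag, size in DOWNLOAD_SIZES_GB.items()
        if size * headroom <= memory_gb
    ]
    if not fitting:
        return None
    _, best_tag = max(fitting)  # largest download size that still fits
    return f"deepseek-r1:{best_tag}"


print(pick_model(16))
```

On a machine with about 16GB to spare, this suggests the 14b model; you would then run `ollama run` followed by the returned name.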

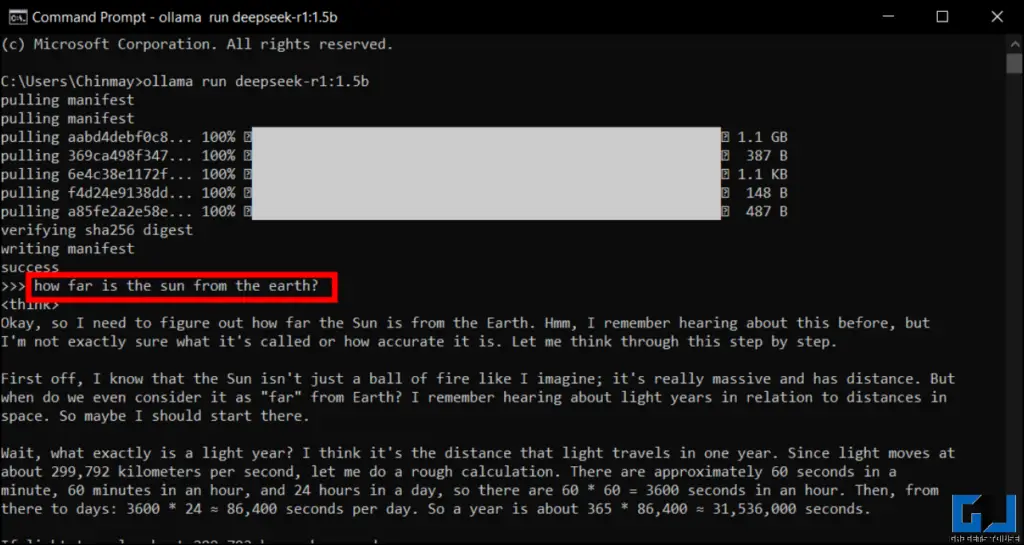

5. Once the download is complete, you can type any question at the prompt and chat with DeepSeek just like a chatbot. Type /bye to exit.

This process installs and runs DeepSeek locally on your computer. You can access it anytime by opening the Command Prompt and entering the command for your model from the table above. The download is needed only once; after that, DeepSeek runs offline, and how quickly it answers depends on your hardware.
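Besides the interactive prompt, Ollama exposes a local HTTP API (by default at http://localhost:11434), so you can also query the model from your own scripts while the Ollama server is running. The sketch below sends a prompt to the `/api/generate` endpoint using only Python’s standard library. Depending on your Ollama version, R1’s reasoning may appear inside `<think>…</think>` tags in the response text; the `split_thinking` helper is an assumption about that format and simply returns the text unchanged if no tags are present.

```python
import json
import re
import urllib.request
from typing import Tuple

OLLAMA_URL = "http://localhost:11434/api/generate"  # Ollama's default local address


def build_request(prompt: str, model: str = "deepseek-r1:1.5b") -> urllib.request.Request:
    """Build a non-streaming POST request for Ollama's generate endpoint."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def split_thinking(text: str) -> Tuple[str, str]:
    """Separate a <think>...</think> block (if any) from the final answer."""
    match = re.search(r"<think>(.*?)</think>", text, flags=re.DOTALL)
    if match is None:
        return "", text.strip()
    return match.group(1).strip(), text[match.end():].strip()


def ask(prompt: str, model: str = "deepseek-r1:1.5b") -> str:
    """Send a prompt to the local Ollama server and return only the answer."""
    with urllib.request.urlopen(build_request(prompt, model)) as resp:
        body = json.loads(resp.read().decode("utf-8"))
    _, answer = split_thinking(body["response"])
    return answer
```

With Ollama running and the model downloaded, `print(ask("Explain recursion in one sentence."))` prints the answer. The request goes only to localhost, so the prompt never leaves your machine.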

Method 2: Run DeepSeek Using Google Colab

Google Colab is a free tool that lets you run code on virtual machines hosted on Google’s servers. So even if your computer is not very powerful, you can run demanding tasks. Note that with this method your prompts are processed on Google’s servers rather than DeepSeek’s. Here’s how.

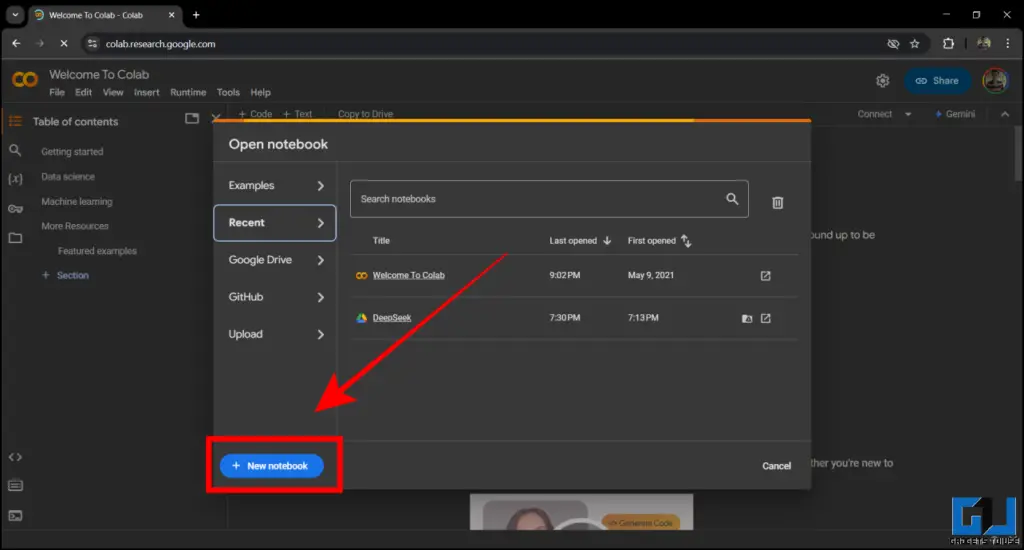

1. Open colab.research.google.com in any web browser and sign in with your Google account. Click on New Notebook.

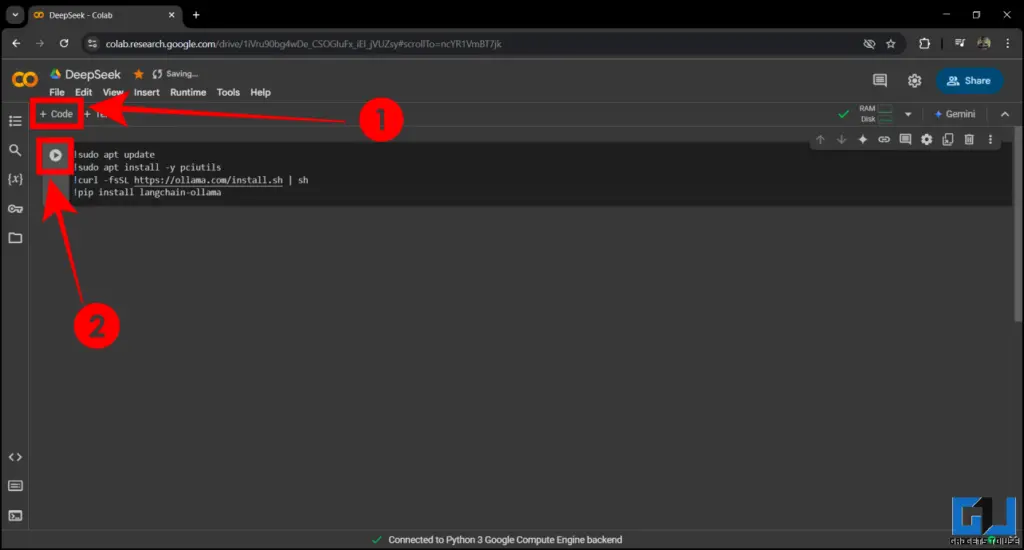

2. Click on the + Code button and paste this code:

!sudo apt update
!sudo apt install -y pciutils
!curl -fsSL https://ollama.com/install.sh | sh
!pip install langchain-ollama

3. Click on the Run button on the left side of the code cell. This step installs Ollama on the Colab virtual machine.

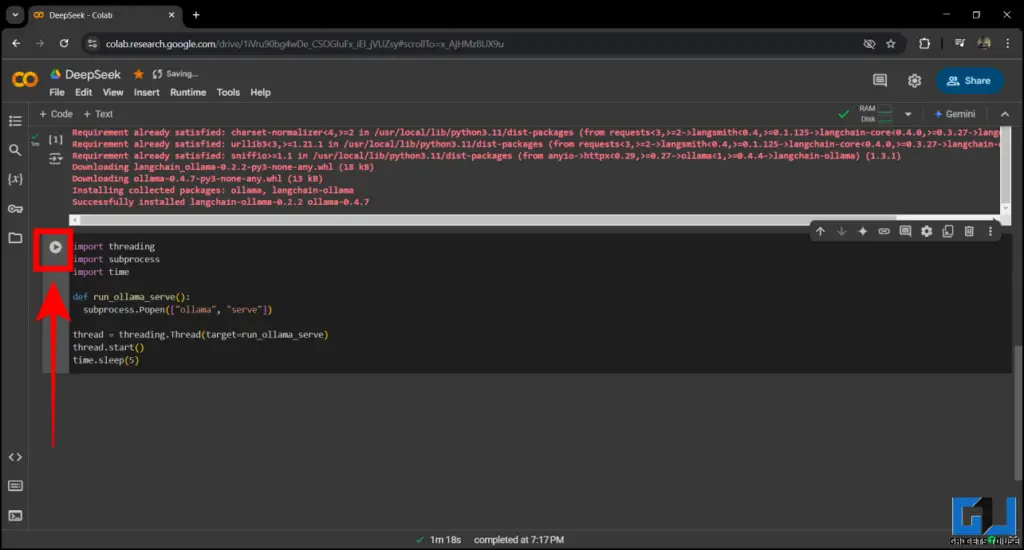

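4. Add another code cell, paste the following code, and run it to start the Ollama server in the background:

import subprocess
import threading
import time

def run_ollama_serve():
    # Launch the Ollama server as a background process
    subprocess.Popen(["ollama", "serve"])

thread = threading.Thread(target=run_ollama_serve)
thread.start()

# Give the server a few seconds to start before using it
time.sleep(5)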
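The fixed five-second pause usually works, but it can be too short on a slow session. A more robust option is to poll the server until it responds. The sketch below assumes Ollama answers HTTP requests at its default address, http://localhost:11434; the `wait_until` and `ollama_is_up` helpers are hypothetical names introduced here.

```python
import time
import urllib.request
from typing import Callable


def wait_until(check: Callable[[], bool], timeout: float = 30.0, interval: float = 0.5) -> bool:
    """Call check() repeatedly until it returns True or `timeout` seconds pass."""
    deadline = time.monotonic() + timeout
    while time.monotonic() < deadline:
        if check():
            return True
        time.sleep(interval)
    return False


def ollama_is_up(url: str = "http://localhost:11434") -> bool:
    """Return True if something answers HTTP 200 at Ollama's default address."""
    try:
        with urllib.request.urlopen(url, timeout=2) as resp:
            return resp.status == 200
    except OSError:  # connection refused, timeout, or HTTP error
        return False
```

In the Colab cell, you could replace `time.sleep(5)` with `wait_until(ollama_is_up)`, which returns as soon as the server is ready and gives up after 30 seconds.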

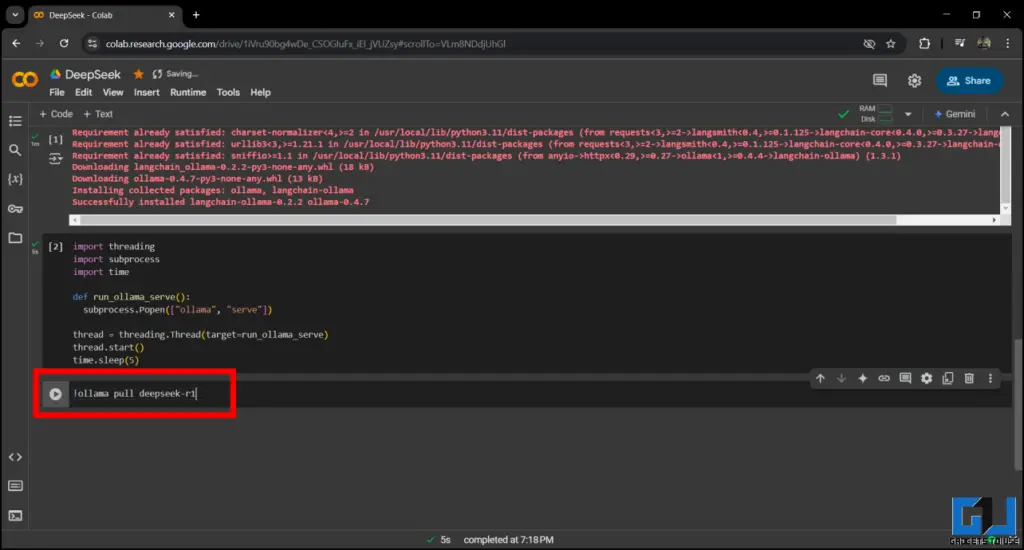

5. Now run this code to download the DeepSeek model:

!ollama pull deepseek-r1

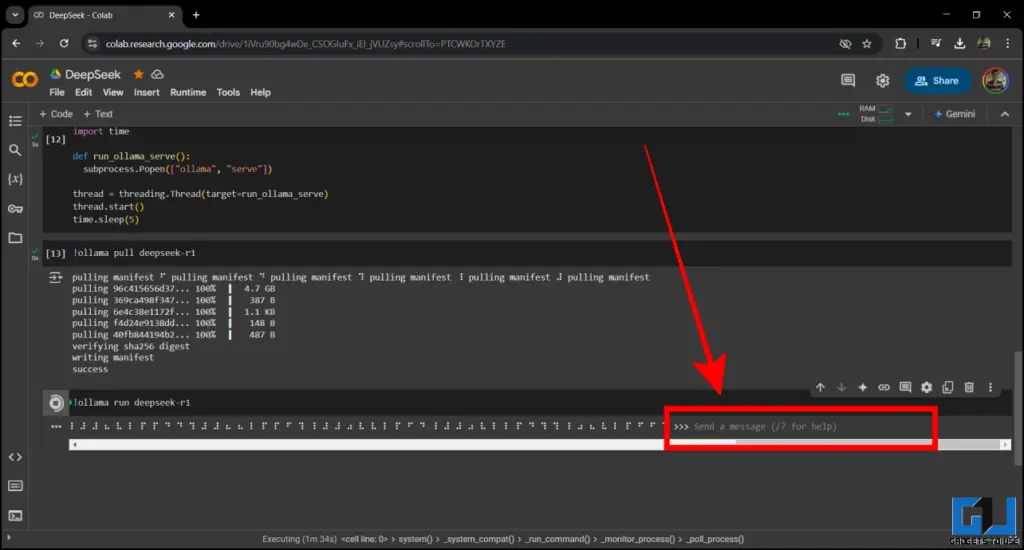

6. Use this code to start DeepSeek in Google Colab. It runs the default deepseek-r1 model downloaded in the previous step.

!ollama run deepseek-r1

7. Scroll to the right until you see the Send a message prompt. Enter any query you want to ask DeepSeek, and click on the Run Code button on the left side.

DeepSeek will answer your question within a few seconds. Note that this method may be slower than running DeepSeek locally, since it relies on the shared resources Google allocates to your session. You also need to repeat these steps every time you close and reopen the notebook, because Colab resets the virtual machine.
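If typing into the interactive prompt inside a notebook cell feels awkward, you can instead query the Ollama server started in step 4 from Python. When streaming is on (the default for Ollama’s `/api/generate` endpoint), the server sends one JSON object per line, each carrying a `response` text fragment, followed by a final object with `done` set to true. This is a minimal sketch under that assumption; `stream_answer` and `iter_fragments` are hypothetical helper names.

```python
import json
import urllib.request
from typing import Iterable, Iterator


def iter_fragments(lines: Iterable[bytes]) -> Iterator[str]:
    """Yield text fragments from Ollama's newline-delimited JSON stream."""
    for raw in lines:
        raw = raw.strip()
        if not raw:
            continue  # skip blank keep-alive lines
        chunk = json.loads(raw)
        if chunk.get("response"):
            yield chunk["response"]
        if chunk.get("done"):
            break  # final object: generation finished


def stream_answer(prompt: str, model: str = "deepseek-r1") -> Iterator[str]:
    """Send a prompt to the local Ollama server and stream the reply."""
    payload = json.dumps({"model": model, "prompt": prompt}).encode("utf-8")
    req = urllib.request.Request(
        "http://localhost:11434/api/generate",
        data=payload,
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(req) as resp:
        # Iterating over the HTTP response yields one line (bytes) at a time.
        yield from iter_fragments(resp)
```

In a new cell, `for piece in stream_answer("What is Google Colab?"): print(piece, end="", flush=True)` prints the reply as it is generated.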

Method 3: Use DeepSeek in Perplexity AI

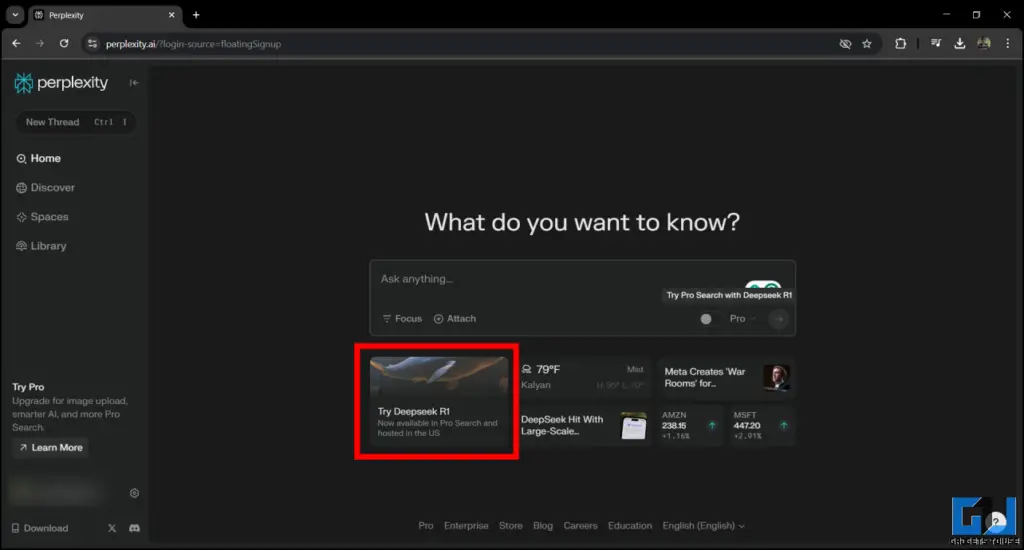

Perplexity AI is a popular US-based company that develops its own large language models and an AI search engine that works similarly to OpenAI’s ChatGPT. Perplexity has integrated DeepSeek into its Pro tier, which you can access with a $20 monthly subscription.

Although this is a paid method, it has its own advantages. Perplexity is based in the United States, so your data is stored on servers located in the USA and is not shared with China. You can access DeepSeek in Perplexity on desktop, Android, or iOS.

1. Download and install the Perplexity app, or use the web version in any browser.

2. Choose the DeepSeek R1 option located below the regular search bar.

3. If you do not have a Pro subscription, Perplexity will ask you to subscribe for $20 per month; without it, you cannot access DeepSeek.

4. Once your subscription is active, you can access DeepSeek R1 in Perplexity without worrying about your data being sent to China.

Perplexity’s CEO Aravind Srinivas says that its DeepSeek integration has none of the censorship present in the official DeepSeek app and website. Hence, it can answer some controversial questions that the original DeepSeek chatbot refuses to answer.

Essentially, the DeepSeek you get within Perplexity Pro searches is American. Both in values (no censorship) and in hosting and storage of your data. 🇺🇸🇺🇸🇺🇸

— Aravind Srinivas (@AravSrinivas) January 28, 2025

FAQs

Q. Do I need a dedicated GPU to run DeepSeek locally on my computer?

No, you do not need a dedicated GPU to run DeepSeek on your computer. However, a GPU will speed up responses and improve your overall experience of using DeepSeek or any other AI model on your computer or laptop, especially with larger models.

Q. Is DeepSeek better than ChatGPT?

Standard industry benchmarks show that DeepSeek is on par with ChatGPT in logic and reasoning tasks such as coding and mathematics. However, ChatGPT still has an edge in media generation and voice support.

Q. Is it safe to run DeepSeek on my computer?

Yes, it is safe to run DeepSeek on your computer, as it only uses your system resources to process your queries. Once the model is downloaded, your prompts are not sent to China or to any other server.
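If you want to double-check that the local Ollama server is not reachable from other machines, you can inspect the address it binds to. Ollama listens on 127.0.0.1:11434 by default, and the `OLLAMA_HOST` environment variable can change that. The sketch below only checks whether the configured address is a loopback (local-only) address; it does not monitor outbound traffic, and Ollama still needs the internet to download models. The helper names are hypothetical.

```python
import ipaddress
import os
from urllib.parse import urlsplit


def bind_host(ollama_host: str) -> str:
    """Extract the host name from an OLLAMA_HOST-style value such as '0.0.0.0:11434'."""
    value = ollama_host.strip() or "127.0.0.1"
    if "://" not in value:
        value = "//" + value  # let urlsplit treat it as host[:port]
    return urlsplit(value).hostname or "127.0.0.1"


def is_local_only(ollama_host: str) -> bool:
    """True if the address only accepts connections from this machine."""
    host = bind_host(ollama_host)
    if host == "localhost":
        return True
    try:
        return ipaddress.ip_address(host).is_loopback
    except ValueError:
        return False  # another host name: cannot confirm it is local-only


# Assumes the default bind address when OLLAMA_HOST is not set.
print(is_local_only(os.environ.get("OLLAMA_HOST", "127.0.0.1:11434")))
```

A result of `False` (for example, when `OLLAMA_HOST` is set to `0.0.0.0`) means other devices on your network could reach the server.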

DeepSeek: The AI Cold War Between America and China

Within just a week of its release, DeepSeek R1 emerged as the top-downloaded app on Apple’s App Store, and its popularity is growing faster than OpenAI’s ChatGPT. Its lower running cost, open-source nature, and powerful reasoning skills are helping DeepSeek grow its user base. However, many users have pointed out that it favours China when answering controversial questions. OpenAI can surely feel the heat from DeepSeek, and we are witnessing what is almost an AI cold war between America and China in the race to achieve AGI (artificial general intelligence).
